January 26th, 2011 — business, design, economics, philosophy, software, trends

David Lee Roth

“He who knows how will always work for he who knows why.”

– David Lee Roth

There are 168 hours in a week and you must decide how to spend them. You’ll probably want to spend some sleeping and eating. What will you do with the rest?

Many people who work with technology pride themselves on knowing how to do things the best way, with the best tools. In fact, the history of technology and its evolution is all about “how” and about finding new, better ways to do things.

But in some important ways, “How” is the enemy of “Why.” Why should you do one thing instead of another thing? Why is it sometimes important to choose one technology over another? Some technologists would argue that it’s important to choose the better technology. Better for what?

Since about age 15, I have bristled whenever people called me a “tech guy,” and I wasn’t sure why. While I may be (on the best days) intelligent enough to pay attention to and use technology well, and maybe to have read a thing or two about algorithms and software, I always felt offended by the label. It was as if people were saying that I knew “how” to do things, but not why.

But I do know why. I’ve read enough philosophy, literature, and scripture to have a sense of what we should be doing on this earth. So calling me a “tech guy” feels wrong. I’m as much of a “why” guy as I am a “how” guy. They’re not mutually exclusive.

People who really know “why” often end up with real power and wealth. To save time, the “why” progeny formed a tribe. They go to the right schools and give each other important-sounding jobs. And they control many people who know “how” (but who may not yet know why). Too often, though, the offspring of powerful people don’t really know “why.” They tried to take a shortcut, and there is none.

I spend a lot of time with tech people, at tech conferences, and in the tech community. Many of those people know how to do a great many things. Fewer know “why.” Some have yet to realize it’s worth knowing. That’s OK, because learning why takes time.

It’s troubling to hear good, smart tech people get into the minutiae of a “how” question that doesn’t matter. (For me, home media usually falls into this category.) When I was younger, I might have had time to figure out the details of streaming movies to three televisions. Now I just don’t care. This is why Apple is making a fortune on its products. They generally deliver good results without requiring people to waste time on the details. (Steve Jobs knows both “why” and “how.”)

Here’s a challenge, tech people: learn “why.” And understand that “how” sometimes comes at the expense of “why.” You need to balance your priorities between the two and choose how you’re going to spend your time each week. If you know only “how,” and never take the time to learn “why,” rest assured you’ll be working for someone who does.

As a tech-aware person you have a head start, because today it’s not enough to know only “why.” Someone who may know why but excludes technological study from their life can’t understand the world properly today because technology shifts so quickly. Sometimes things that once were important simply become obsolete.

Sometimes I talk to tech people who think they don’t have any real power because they are not part of the old-school power tribe. But nothing could be further from the truth, for inherited power is not real power.

No one has more power than someone who knows both “how” and “why.” Become that person and you change the world.

August 1st, 2010 — art, baltimore, business, design, economics, geography, philosophy, politics, software, trends

Putty Hill, a film by Matthew Porterfield (2010)

Something amazing is happening in the world of filmmaking. Crowdsourced funding mechanisms like Kickstarter.com are enabling a new generation of filmmakers to get a foothold doing what they love, where they want to do it. They’re using social media to find acting talent, and new digital camera technologies are making it possible to create amazing, high-quality films for a fraction of what they used to cost.

Matthew Porterfield

I’m particularly impressed by the work of Baltimore filmmaker Matthew Porterfield, whose films “Hamilton” (2006) and “Putty Hill” (2010) exemplify the new kind of “cinepreneurial” skillset which will certainly come to define 21st century filmmaking. (You can read here about the funding and creative process behind Putty Hill.)

Porterfield is a nice, unassuming guy who teaches film at Johns Hopkins. He tells his students that if they want to make documentaries, they need to go to New York, and that for pretty much everything else, they should go to Los Angeles. For today, this is sound advice. It’s the same kind of advice you’d give talented coders looking to unleash the next big web technology: go to San Francisco, because that’s where the industry is centered, at least right now.

But if you ask Porterfield why he doesn’t take his own advice, he’d likely offer a cryptic sort of answer: that he’d considered it but really couldn’t imagine himself anywhere else. I don’t know him well enough to speak for him, so I hope he weighs in here. But Matt and I are kindred spirits: we are both actively choosing place over anything else, and investing our time and talent to make it better.

Let’s Invest in Maryland Film, Not in Hollywood

Baltimore and Maryland have been home to many well-known movie and television productions over the years, not least Homicide: Life on the Street, The Wire, and a slew of films by Baltimore native Barry Levinson, including Diner, Tin Men, and Avalon. And most of these productions received significant subsidies from the State of Maryland.

As budgets have continued to tighten, the O’Malley administration made a strategic decision to cut back on investment in film production subsidies. And that has probably been a very wise decision. Other states have been more than willing to outbid Maryland, offering ridiculous breaks. And Maryland really doesn’t need to be in yet another race to the bottom.

The Curious Case of Benjamin Button (2008)

The film The Curious Case of Benjamin Button (2008) was based on a short story by F. Scott Fitzgerald (who lived around the corner from me in Bolton Hill when he wrote it), and it was originally set in Baltimore (original text). Yet the film version was set in New Orleans and had a subtext about a dying woman retelling the story as Katrina bore down on the city. Why? Subsidies. New Orleans offered more subsidies than Maryland would. And so the story was changed and moved there. Who knows if the Katrina storyline was a condition in the contract!

I don’t really have an opinion about whether Benjamin Button should have been filmed in Baltimore, but I do have an opinion about engaging in zero-sum games with 49 other desperate states: it’s bad policy. And I also think the time has come to admit that big movie studios are the next big dinosaur to face extinction. Why should Sony or Disney or Universal make the bulk of the world’s content when every man, woman, and child has access to a $200 HD camera and a $999 post-production studio?

Investing in Cinepreneurs

John Waters is one of Baltimore’s great artistic assets. And it’s not because of film subsidies. His work is known worldwide, and it celebrates the quirky, distinctive voice of Baltimore. Matthew Porterfield is distinctive and quirky too, and he makes beautiful pictures: he’ll be next to make his mark. And there are dozens more teeming around places like MICA, the Creative Alliance CAMM Cage, Johns Hopkins, Towson University, and UMBC. We need only to nurture their talent and the ecosystem.

Browncoats: Redemption, 2010

Another film, Browncoats: Redemption, was made locally last year by local entrepreneurs Michael Dougherty and Steven Fisher. It uses an innovative non-profit funding model: the film is raising money for five charities, and its makers leveraged social media and the Internet to recruit 160+ volunteers and market the film.

Instead of blowing money on Hollywood productions that bring little more than short-term contract and catering work to Maryland, why don’t we start investing in the artists in our own backyard? Just as IT startups have become much cheaper to launch, it’s now possible to make films for anywhere from $50K to $150K. If we dedicate between $5M and $7M to matching funds raised via mechanisms like Kickstarter, we could make something like 150 to 300 feature-length films here in Baltimore. This would unleash a new wave of creativity that would bear fruit for decades to come, and put Maryland on the map as a destination for filmmakers.

We already have great supporters of film in the Maryland Film Festival, Creative Alliance, and many other organizations. It wouldn’t take much to get this off the ground. Instead of going back to the 1980s in our approach to film production (as former Governor Ehrlich has recently proposed), let’s take advantage of all the tools in our arsenal to jumpstart the film industry and move it forward in Maryland.

For every new artistic voice we nurture, we’ll be building Maryland’s unique brand in a way that no one else can compete with. It will make an impression for decades. And investing in film and the arts will help the technology scene flourish as well. Intelligent creative professionals want to be together. And coders and graphic artists think film and filmmakers are pretty cool.

We shouldn’t let an aversion to the failed subsidy policies of the past get in the way of forging a new creative future that we all can benefit from. We can invest in the arts intelligently. Let’s start today.

March 30th, 2010 — art, business, design, economics, mobile, philosophy, software, trends

The iPad promises to be a very big deal: not just because it’s the next big over-hyped thing from Apple, but because it fundamentally shifts the way that humans will interact with computing.

Let’s call this the “fourth turning” of the computing paradigm.

Calculators

Early “computers” were electro-mechanical, then electric, and then later all electronic. But the metaphor was constant: you pushed buttons to enter either values or operators, and you had to adhere to a fixed notation to obtain the desired results. This model was a “technology” in the truest sense of the word, replacing “how” a pre-existing task got done. It didn’t fundamentally change the user, it just made a hard task easier.

8-Bit Computers: Keyboards

The early days of computing were characterized by business machines (CP/M, DOS, and character-based paradigms) and by low-end “graphics and sound” computers like the Atari 800, Apple II, and Commodore 64.

The promise here was “productivity” and “fun,” offering someone a more orderly typewriting experience or the opportunity to touch the edges of the future with some games and online services. But the QWERTY keyboard (and its derivatives) dates back to at least 1905. And the first commercially successful typewriters were made by Remington, the arms manufacturer.

The keyboard input model enforces a verbal, semantic view of the world. The command line interface scared the hell out of so many people because they didn’t know what they might “say” to a computer, and they were often convinced they’d “mess it up.” During this era, computing was definitely still not a mainstream activity.

More of the population was older (relative to computing) and had no experience with the concepts.

The Mouse, GUI, and the Web

Since the introduction of the Macintosh, and later Windows, the metaphors of the mouse, GUI, and the web have become so pervasive we don’t even think about them anymore.

But the reality is that the mouse is a 1970s implementation of a 1950s idea, stolen by Apple from Xerox PARC for the Lisa. Windows is a copy of the Macintosh.

The graphical computing metaphor, combined with the web, has opened the power of the Internet to untold millions, but it’s not hard to argue that we’re all running around with Rube Goldberg-like contraptions, cobbled together from parts dating from 1905, 1950, and 1984. Even so, the mouse alone has probably done more to open up computing than anything else so far.

The mouse enforces certain modes of use. The mouse is an analog proxy for the movement of our hands. Most people are right-handed, and the right hand is controlled by the left hemisphere of the brain, which science has long argued is responsible for logic and reason. While a good percentage of the population is left-handed, the fact remains that our interactions with mice are dominated by one half of the brain. Imagine how different your driving would be if you used only one hand.

While we obviously use two hands to interact with a keyboard, some cannot do that well, and it continues a semantic, verbal mode of interaction.

iPad

The iPad will offer the first significant paradigm shift since the introduction of the mouse. And let me be clear: it doesn’t matter whether hardcore geeks like it now, or think it lacks features, or agree with Apple’s App Store policies.

The iPad will open up new parts of the human brain.

By allowing a tactile experience, by allowing people to interact with the world using two hands, by promoting and enabling ubiquitous network connections, the iPad will extend the range and the reach of computing to places we haven’t yet conceived.

Seriously. The world around us is reflected by our interactions with it. We create based on what we can perceive, and we perceive what we can sense. The fact that you can use two hands with this thing and that it appears to be quick and responsive is a really big deal. It will light up whole new parts of the brain, especially the right hemisphere — potentially making our computing more artistic and visual.

Just as the mouse ushered in 25 years of a new computing paradigm, pushing computing technology out over a much larger portion of the market, the iPad marks the beginning of the next 25 years of computing.

And before you get worried about how people will type their papers and design houses and edit video without traditional “computers,” let me answer: no one knows. We’ll use whatever’s available until something better comes along.

But computing platforms are created and shaped by raw numbers and the iPad has every opportunity to reach people in numbers as-yet unimagined. That will have the effect of making traditional software seem obsolete nearly overnight.

When the Macintosh was released, it was widely derided as a “toy” by the “business computing” crowd. We see how well that turned out.

This time, expect a bright line shift: BIP and AIP (before iPad and after iPad). It’s the first time that an entirely new design has been brought to market, answering the question, “Knowing everything you know now, what would you design as the ultimate computer for people to use with the global network?”

It’s 2010, and we don’t need to be tied down to paradigms from 1950 or 1905. Everything is different now, and it’s time our tools evolved to match the potential of our brains and bodies.

November 7th, 2009 — art, baltimore, business, design, geography, philosophy, trends

In 1983 at age 12, I became drawn to the design and tech culture of San Francisco. By that time I was already deeply involved in computers and the other tech of the day, and had been reading every issue of BYTE Magazine cover-to-cover when it arrived in our mailbox after school.

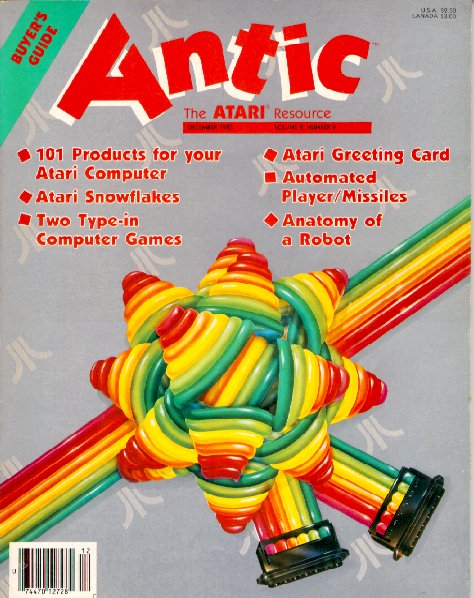

BYTE was produced in New Hampshire and had a scholarly tone; still, the emerging world of computing was breathlessly covered, and offered a sense of endless possibility. But it was Antic magazine (a specialty computing magazine for Atari computers), specifically the December 1983 “Buyer’s Guide” issue that really caught my eye.

The design was colorful and imaginative, with beautiful typography, and the magazine was full of amazing ideas and products which I was sure would launch me on my way to unlimited exploration. I devoured the magazine cover to cover, but I never realized just how much I was soaking up its design ethos. Colorful, playful, and bold, this was not the wry, academic BYTE. It was combining the substance of tech with the emerging design scene in San Francisco, and it resonated with me profoundly.

In 1985, I got a job at a local computer store doing what I loved: selling computers and software and, yes, copies of Antic magazine. In 1986, I started my own computer and software sales company, Toad Computers. In 1989, months after graduating from high school, I had the chance to visit Antic Magazine — this time as an advertiser.

This was my first trip to San Francisco and I visited Antic at their loft office, located at 544 Second Street, right in the heart of the city’s SOMA district. But this was SOMA before it was the SOMA we know now as the home of so many startup tech companies. Beat up and edgy, the open-air second floor office had high-beamed ceilings and gave a sense of history and limitless potential. I was smitten with the city and with valley tech culture – I also visited Atari’s headquarters in Sunnyvale that trip – and absorbed all that I saw.

Later, in 1993, I was twenty-one and searching for new things to explore. Toad Computers was doing well, but I knew that it would have to change and grow to survive. Atari was having tough times. Antic magazine had folded. To advertise effectively, we were sending out massive catalog mailings, featuring 56-page catalogs that I personally designed, very much in the visual style of Antic magazine.

Someone had told me about a new magazine called Wired. I picked up a copy and was immediately struck by its sense of visual design and its aura of infinite possibility through the combination of design and tech. Again, I ingested every word, photo, and illustration in each issue. In early 1994, I noticed an ad indicating that Wired, this tiny publishing startup, was looking for a circulation manager. I was entranced at the possibility. With my background in direct marketing and managing big catalog mailing lists, I thought this might be an opportunity for me.

In February 1994, I booked a trip to San Francisco to talk to my kindred spirits at Wired about the possibility of working there. I also became entranced with the Internet and its possibilities at this time, and for several days before my trip to San Francisco, I worked feverishly to write an article for Wired about how the Internet – when it became fully developed and evolved – could become a kind of real-time Jungian web of knowledge that acted like a global brain. I theorized that the Internet could become a kind of collective consciousness that enabled humanity’s genius to be available to everyone all the time. I predicted online banking, shopping, and video chat and made illustrations to show how these things would work.

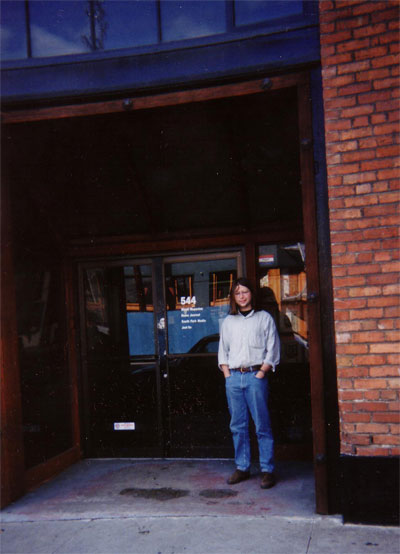

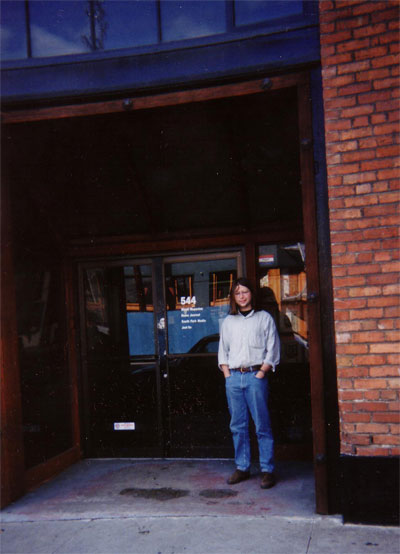

Me, with long hair, at Wired HQ in February 1994

Of course, the simple things were not hard to predict at that time, though they were still a few years off. But my central thesis about Jungian synchronicity was just too wacko to print in 1994. And to be fair, I had cobbled the article together in just a couple of days, had worked in ample quotes from Marshall McLuhan and Carl Jung, and had interviewed no one. My thesis may have been strong, but the piece would have benefited from some interviews and editing. But hey, I was inspired and twenty-two.

When I went to Wired’s offices, I was stunned to learn that they were located in the same office that Antic had occupied! The same open air loft office at 544 Second Street. I met with some folks from Wired’s barebones staff. I commented on my perceived sense of Jungian synchronicity — about Antic and Wired sharing the same office space. We talked about job possibilities. I submitted my article.

I didn’t get a job, and they didn’t print my article. To be fair, I wasn’t really ready to move to San Francisco, and I am sure they sensed that. I also wasn’t sure what I wanted. I just knew that I was drawn to this hopeful admixture of design and tech that seemed to emanate, radio-like, from 544 Second St.

In March 2007, two weeks after I had built Twittervision and a week after SXSW launched Twitter onto the early adopter stage, I thought it would be fun to stop by Twitter HQ in San Francisco. I met Biz and Jack and Ev, and was once again amazed to see that something I had been drawn to had come from SOMA; just a few blocks from 544 Second St. And ironically, it is now Twitter and the “Real Time Web” that is beginning to enable the kind of global consciousness that I had predicted in 1994.

This past Thursday at TEDxMidAtlantic (of which I was the lead organizer and curator) in Baltimore, I was struck by the beautiful design of our stage set. (Thanks to Paul Wolman at Feats, Inc. for bringing it together for us!) A simple combination of bookshelves, cut lettering, books, a few objects, and blue wash backlighting combined to produce a gorgeous backdrop for the extraordinary ideas that our speakers would soon be sharing. And I felt at home. I could not go to 544 Second Street and SOMA. Instead, it was my mission to bring it here.